Search Autocomplete System

As you enter your query in the search box on Google or while shopping on Amazon, various matches for your search term are displayed to you. This functionality is commonly known as autocomplete or incremental search. The functional requirements are straightforward, as we type we want out service to return top k suggestions based on our query. As for the non-functional requirements we're looking at fast response time, relevant and sorted results while also achieving High availability, Fault tolerant and High scalability system.

The most natural data structure for achieving fast quering is a Trie, below we have an algorithm which computes the top k results based on the search query.

class AutocompleteSystem {

class TrieNode {

char val;

boolean isWord;

Map<String, Integer> count;

TrieNode[] children;

public TrieNode(char val) {

this.val = val;

count = new HashMap<>();

children = new TrieNode[27];

}

}

class Trie {

TrieNode root;

public Trie() {

root = new TrieNode(' ');

}

public void insert(String query, int frequency) {

TrieNode curNode = root;

for (char c : query.toCharArray()) {

int index = c == ' ' ? 26 : c - 'a';

if (curNode.children[index] == null) {

curNode.children[index] = new TrieNode(c);

}

curNode = curNode.children[index];

curNode.count.put(query, curNode.count.getOrDefault(query, 0) + frequency);

}

}

public TrieNode search(String prefix) {

TrieNode curNode = root;

for (char c : prefix.toCharArray()) {

int index = c == ' ' ? 26 : c - 'a';

if (curNode.children[index] == null) {

return null;

}

curNode = curNode.children[index];

}

return curNode;

}

}

class WordInfo implements Comparable<WordInfo> {

String word;

int count;

public WordInfo(String word, int count) {

this.word = word;

this.count = count;

}

public int compareTo(WordInfo that) {

return this.count == that.count ? this.word.compareTo(that.word) : that.count - this.count;

}

}

Trie trie;

String prefix;

public AutocompleteSystem(String[] queries, int[] times) {

trie = new Trie();

prefix = "";

for (int i = 0; i < queries.length; i++) {

trie.insert(queries[i], times[i]);

}

}

public List<String> input(char c) {

List<String> result = new ArrayList<>();

if (c == '#') {

trie.insert(prefix, 1);

prefix = "";

return result;

}

prefix = prefix + c;

TrieNode node = trie.search(prefix);

if (node == null) {

return result;

}

PriorityQueue<WordInfo> maxHeap = new PriorityQueue<>();

for (String key : node.count.keySet()) {

maxHeap.add(new WordInfo(key, node.count.get(key)));

}

for (int i = 0; i < 3 && !maxHeap.isEmpty(); ++i) {

result.add(maxHeap.remove().word);

}

return result;

}

}

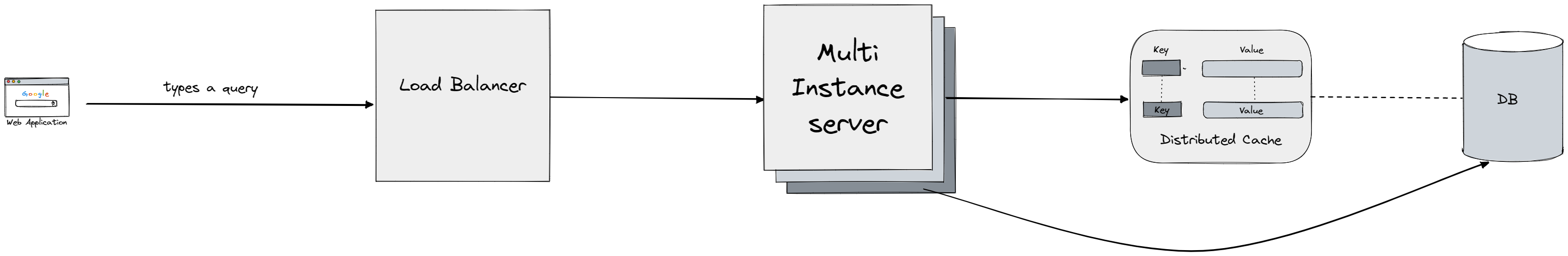

This code implements an autocomplete system that provides top 3 search results for a given prefix. The system uses Trie data structure to store the words and their frequencies. The Trie is constructed using queries and times as inputs. The input() method appends characters to the prefix and performs a search in the Trie for the prefix. We assume here we would be ending our input with some special character # but of course this can be modified to insert the prefix after some inactivity or when user selects one of the suggestions. If the prefix is found in the Trie, the frequencies of all words with the prefix are collected in a priority queue, which is sorted based on the frequency and lexicographic order. The top 3 elements of the priority queue are returned as the result. To build the trie we have time complexity of O(nl) where n is the number of queries we currently have stored in some storage, and where l is the average length of a query. Of course quering directly a trie in a real time system would be time consuming and we wouldn't achieve fast response time especially because we will be dealing with billions of queries (and for each query we have O(l + klogk) where l is the time to found a query in trie of length l and then we have k words that match our query in our count hashmap leading to k operations on the heap O(klogk), actually we need even more time since for the ordering purposes we compare strings which requires linear time) so we do a time-space trade-off and we periodically create a trie and store in a key-value store/distributed cache Key-Value Store where we map a prefix to a key, and have a list of sorted (based on frequency) mathing suggestions. We will have worker nodes which will for each time interval defined (e.g. 1min, 1hour...) create/update a trie. For permanent storage we will store the trie in a database like Amazon Aurora.

The search query is initially sent to a load balancer. The load balancer then directs the request to the API servers. The API servers access the Trie Cache to retrieve trie data and create autocomplete suggestions for the client. If the data is not found in the cache, it is replenished to the cache for future requests. This ensures that subsequent requests for the same prefix are answered quickly. However, cache misses may occur if the cache server is out of memory or offline. To improve speed, the following optimizations are proposed: using AJAX requests for web applications, which allows for quick sending and receiving of request and response without refreshing the entire page, and utilizing browser caching, which saves the autocomplete suggestions in the cache for a set period of time to allow for faster retrieval in subsequent requests. Similar to Google search engine, this cache is specific to a single user and is not shared. We're using smart sharding/partition by analyising query patterns (number of queries starting with 'a' is equal to the number of queries starting with 'x', 'y', 'z' for example so we can distributed it in two shards/partitions) both for the cache and database to distribute the load uniformly since the trie can become too large to be stored in single db/cache instance.

To support multiple language we can just extend our set of allowed characters (for the algorithm above only english lowercase letters) to Unicode (supporting all characters we might need) and we could store different tries for different countries since the query patterns might be different. The last questions is how do we actually create the frequency map for the queries in real-time system? Since we have a top k problem which lies on top of counting occurences we can completely utilize the analytics aggregation with lambda (slow and fast path) we implemented in Tiny URL which output will be top k searched queries which we can then take into our workers node which run the algorithm above.